Vanlok

deus ex machina

- Член од

- 30 мај 2009

- Мислења

- 25.397

- Поени од реакции

- 33.512

Првиот од парот написи кои ги посочувам како „храна за мислата“ и поттик за дискусија:

www.rutherford.org

www.rutherford.org

The Genetic Panopticon: We're All Suspects In A DNA Lineup, Waiting To Be Matched With A Crime

Whatever skeletons may be lurking on your family tree or in your closet, whatever crimes you may have committed, whatever associations you may have with those on the government’s most wanted lists: the police state is determined to ferret them out.

In an age of overcriminalization, round-the-clock surveillance, and a police state eager to flex its muscles in a show of power, we are all guilty of some transgression or other.

No longer can we consider ourselves innocent until proven guilty.

Now we are all suspects in a DNA lineup waiting to be matched up with a crime.

Suspect State, meet the Genetic Panopticon.

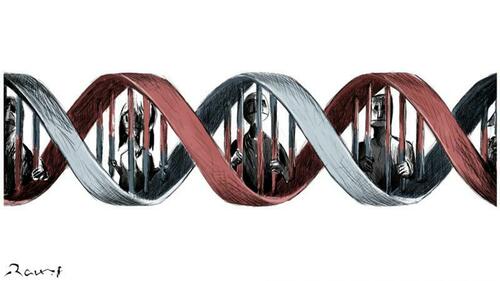

DNA technology in the hands of government officials will complete our transition to a Surveillance State in which prison walls are disguised within the seemingly benevolent trappings of technological and scientific progress, national security and the need to guard against terrorists, pandemics, civil unrest, etc.

By accessing your DNA, the government will soon know everything else about you that they don’t already know: your family chart, your ancestry, what you look like, your health history, your inclination to follow orders or chart your own course, etc.

It’s getting harder to hide, even if you think you’ve got nothing to hide.

Armed with unprecedented access to DNA databases amassed by the FBI and ancestry website, as well as hospital newborn screening programs, police are using forensic genealogy, which allows police to match up an unknown suspect’s crime scene DNA with that of any family members in a genealogy database, to solve cold cases that have remained unsolved for decades.

By submitting your DNA to a genealogical database such as Ancestry and 23andMe, you’re giving the police access to the genetic makeup, relationships and health profiles of every relative—past, present and future—in your family, whether or not they ever agreed to be part of such a database.

It no longer even matters if you’re among the tens of millions of people who have added their DNA to ancestry databases. As Brian Resnick reports, public DNA databases have grown so massive that they can be used to find you even if you’ve never shared your own DNA.

That simple transaction—a spit sample or a cheek swab in exchange for getting to learn everything about one’s ancestral makeup, where one came from, and who is part of one’s extended family—is the price of entry into the Suspect State for all of us.

After all, a DNA print reveals everything about “who we are, where we come from, and who we will be.” It can also be used to predict the physical appearance of potential suspects.

It’s what police like to refer to a “modern fingerprint.”

Whereas fingerprint technology created a watershed moment for police in their ability to “crack” a case, DNA technology is now being hailed by law enforcement agencies as the magic bullet in crime solving, especially when it helps them crack cold cases of serial murders and rapists.

After all, who wouldn’t want to get psychopaths and serial rapists off the streets and safely behind bars, right?

At least, that’s the argument being used by law enforcement to support their unrestricted access to these genealogy databases, and they’ve got the success stories to prove it.

For instance, a 68-year-old Pennsylvania man was arrested and charged with the brutal rape and murder of a young woman almost 50 years earlier. Relying on genealogical research suggesting that the killer had ancestors who hailed from a small town in Italy, investigators narrowed their findings down to one man whose DNA, obtained from a discarded coffee cup, matched the killer’s.

In another cold case investigation, a 76-year-old man was arrested for two decades-old murders after his DNA was collected from a breathalyzer during an unrelated traffic stop.

Yet it’s not just psychopaths and serial rapists who are getting caught up in the investigative dragnet. In the police state’s pursuit of criminals, anyone who comes up as a possible DNA match—including distant family members—suddenly becomes part of a circle of suspects that must be tracked, investigated and ruled out.

Victims of past crimes are also getting added to the government’s growing DNA database of potential suspects. For instance, San Francisco police used a rape victim’s DNA, which was on file from a 2016 sexual assault, to arrest the woman for allegedly being involved in a property crime that took place in 2021.

In this way, “guilt by association” has taken on new connotations in a technological age in which one is just a DNA sample away from being considered a person of interest in a police investigation. As Jessica Cussins warns in Psychology Today, “The fundamental fight—that data from potentially innocent people should not be used to connect them to unrelated crimes—has been lost.”

Until recently, the government was required to at least observe some basic restrictions on when, where and how it could access someone’s DNA. That was turned on its head by various U.S. Supreme Court rulings that heralded the loss of privacy on a cellular level.

For instance, the U.S. Supreme Court ruled in Maryland v. King that taking DNA samples from a suspect doesn’t violate the Fourth Amendment. The Court’s subsequent decision to let stand the Maryland Court of Appeals’ ruling in Raynor v. Maryland, which essentially determined that individuals do not have a right to privacy when it comes to their DNA, made Americans even more vulnerable to the government accessing, analyzing and storing their DNA without their knowledge or permission.

It’s all been downhill since then.

Indeed, the government has been relentless in its efforts to get hold of our DNA, either through mandatory programs carried out in connection with law enforcement and corporate America, by warrantlessly accessing our familial DNA shared with genealogical services such as Ancestry and 23andMe, or through the collection of our “shed” or “touch” DNA.

Get ready, folks, because the government has embarked on a diabolical campaign to create a nation of suspects predicated on a massive national DNA database.

This has been helped along by Congress (which adopted legislation allowing police to collect and test DNA immediately following arrests), President Trump (who signed the Rapid DNA Act into law), the courts (which have ruled that police can routinely take DNA samples from people who are arrested but not yet convicted of a crime), and local police agencies (which are chomping at the bit to acquire this new crime-fighting gadget).

For example, Rapid DNA machines—portable, about the size of a desktop printer, highly unregulated, far from fool-proof, and so fast that they can produce DNA profiles in less than two hours—allow police to go on fishing expeditions for any hint of possible misconduct using DNA samples.

Journalist Heather Murphy explains: “As police agencies build out their local DNA databases, they are collecting DNA not only from people who have been charged with major crimes but also, increasingly, from people who are merely deemed suspicious, permanently linking their genetic identities to criminal databases.”

All 50 states now maintain their own DNA government databases, although the protocols for collection differ from state to state. Increasingly, many of the data from local databanks are being uploaded to CODIS, the FBI’s massive DNA database, which has become a de facto way to identify and track the American people from birth to death.

Even hospitals have gotten in on the game by taking and storing newborn babies’ DNA, often without their parents’ knowledge or consent. It’s part of the government’s mandatory genetic screening of newborns. In many states, the DNA is stored indefinitely. There’s already a move underway to carry out whole genome sequencing on newborns, ostensibly to help diagnose rare diseases earlier and improve health later in life, which constitutes an ethical minefield all by itself.

What this means for those being born today is inclusion in a government database that contains intimate information about who they are, their ancestry, and what awaits them in the future, including their inclinations to be followers, leaders or troublemakers.

Just recently, in fact, police in New Jersey accessed the DNA from a nine-year-old blood sample of a newborn baby in order to identify the child’s father as a suspect in a decades-old sexual assault.

The ramifications of this kind of DNA profiling are far-reaching.

At a minimum, these DNA databases do away with any semblance of privacy or anonymity.

The lucrative possibilities for hackers and commercial entities looking to profit off one’s biological record are endless. It’s estimated that the global human identification market is projected to reach $6.5 billion by 2032.

These genetic databases and genomic technology also make us that much more vulnerable to creeps and cyberstalkers, genetic profiling, and those who would weaponize the technology against us.

Unfortunately, the debate over genetic privacy—and when one’s DNA becomes a public commodity outside the protection of the Fourth Amendment’s prohibition on warrantless searches and seizures—continues to lag far behind the government and Corporate America’s encroachments on our rights.

Moreover, while much of the public debate, legislative efforts and legal challenges in recent years have focused on the protocols surrounding when police can legally collect a suspect’s DNA (with or without a search warrant and whether upon arrest or conviction), the question of how to handle “shed” or “touch” DNA has largely slipped through without much debate or opposition.

As scientist Leslie A. Pray notes:

We’re All Suspects in a DNA Lineup, Waiting to be Matched with a Crime | By John & Nisha Whitehead

Get ready, folks, because the government has embarked on a diabolical campaign to create a nation of suspects predicated on a massive national DNA database.

The Genetic Panopticon: We're All Suspects In A DNA Lineup, Waiting To Be Matched With A Crime

Be warned: the DNA detectives are on the prowl.“Solving unsolved crimes is a noble objective, but it occupies a lower place in the American pantheon of noble objectives than the protection of our people from suspicionless law-enforcement searches… Make no mistake about it…your DNA can be taken and entered into a national DNA database if you are ever arrested, rightly or wrongly, and for whatever reason… Perhaps the construction of such a genetic panopticon is wise. But I doubt that the proud men who wrote the charter of our liberties would have been so eager to open their mouths for royal inspection.”

- Justice Antonin Scalia dissenting in Maryland v. King

Whatever skeletons may be lurking on your family tree or in your closet, whatever crimes you may have committed, whatever associations you may have with those on the government’s most wanted lists: the police state is determined to ferret them out.

In an age of overcriminalization, round-the-clock surveillance, and a police state eager to flex its muscles in a show of power, we are all guilty of some transgression or other.

No longer can we consider ourselves innocent until proven guilty.

Now we are all suspects in a DNA lineup waiting to be matched up with a crime.

Suspect State, meet the Genetic Panopticon.

DNA technology in the hands of government officials will complete our transition to a Surveillance State in which prison walls are disguised within the seemingly benevolent trappings of technological and scientific progress, national security and the need to guard against terrorists, pandemics, civil unrest, etc.

By accessing your DNA, the government will soon know everything else about you that they don’t already know: your family chart, your ancestry, what you look like, your health history, your inclination to follow orders or chart your own course, etc.

It’s getting harder to hide, even if you think you’ve got nothing to hide.

Armed with unprecedented access to DNA databases amassed by the FBI and ancestry website, as well as hospital newborn screening programs, police are using forensic genealogy, which allows police to match up an unknown suspect’s crime scene DNA with that of any family members in a genealogy database, to solve cold cases that have remained unsolved for decades.

By submitting your DNA to a genealogical database such as Ancestry and 23andMe, you’re giving the police access to the genetic makeup, relationships and health profiles of every relative—past, present and future—in your family, whether or not they ever agreed to be part of such a database.

It no longer even matters if you’re among the tens of millions of people who have added their DNA to ancestry databases. As Brian Resnick reports, public DNA databases have grown so massive that they can be used to find you even if you’ve never shared your own DNA.

That simple transaction—a spit sample or a cheek swab in exchange for getting to learn everything about one’s ancestral makeup, where one came from, and who is part of one’s extended family—is the price of entry into the Suspect State for all of us.

After all, a DNA print reveals everything about “who we are, where we come from, and who we will be.” It can also be used to predict the physical appearance of potential suspects.

It’s what police like to refer to a “modern fingerprint.”

Whereas fingerprint technology created a watershed moment for police in their ability to “crack” a case, DNA technology is now being hailed by law enforcement agencies as the magic bullet in crime solving, especially when it helps them crack cold cases of serial murders and rapists.

After all, who wouldn’t want to get psychopaths and serial rapists off the streets and safely behind bars, right?

At least, that’s the argument being used by law enforcement to support their unrestricted access to these genealogy databases, and they’ve got the success stories to prove it.

For instance, a 68-year-old Pennsylvania man was arrested and charged with the brutal rape and murder of a young woman almost 50 years earlier. Relying on genealogical research suggesting that the killer had ancestors who hailed from a small town in Italy, investigators narrowed their findings down to one man whose DNA, obtained from a discarded coffee cup, matched the killer’s.

In another cold case investigation, a 76-year-old man was arrested for two decades-old murders after his DNA was collected from a breathalyzer during an unrelated traffic stop.

Yet it’s not just psychopaths and serial rapists who are getting caught up in the investigative dragnet. In the police state’s pursuit of criminals, anyone who comes up as a possible DNA match—including distant family members—suddenly becomes part of a circle of suspects that must be tracked, investigated and ruled out.

Victims of past crimes are also getting added to the government’s growing DNA database of potential suspects. For instance, San Francisco police used a rape victim’s DNA, which was on file from a 2016 sexual assault, to arrest the woman for allegedly being involved in a property crime that took place in 2021.

In this way, “guilt by association” has taken on new connotations in a technological age in which one is just a DNA sample away from being considered a person of interest in a police investigation. As Jessica Cussins warns in Psychology Today, “The fundamental fight—that data from potentially innocent people should not be used to connect them to unrelated crimes—has been lost.”

Until recently, the government was required to at least observe some basic restrictions on when, where and how it could access someone’s DNA. That was turned on its head by various U.S. Supreme Court rulings that heralded the loss of privacy on a cellular level.

For instance, the U.S. Supreme Court ruled in Maryland v. King that taking DNA samples from a suspect doesn’t violate the Fourth Amendment. The Court’s subsequent decision to let stand the Maryland Court of Appeals’ ruling in Raynor v. Maryland, which essentially determined that individuals do not have a right to privacy when it comes to their DNA, made Americans even more vulnerable to the government accessing, analyzing and storing their DNA without their knowledge or permission.

It’s all been downhill since then.

Indeed, the government has been relentless in its efforts to get hold of our DNA, either through mandatory programs carried out in connection with law enforcement and corporate America, by warrantlessly accessing our familial DNA shared with genealogical services such as Ancestry and 23andMe, or through the collection of our “shed” or “touch” DNA.

Get ready, folks, because the government has embarked on a diabolical campaign to create a nation of suspects predicated on a massive national DNA database.

This has been helped along by Congress (which adopted legislation allowing police to collect and test DNA immediately following arrests), President Trump (who signed the Rapid DNA Act into law), the courts (which have ruled that police can routinely take DNA samples from people who are arrested but not yet convicted of a crime), and local police agencies (which are chomping at the bit to acquire this new crime-fighting gadget).

For example, Rapid DNA machines—portable, about the size of a desktop printer, highly unregulated, far from fool-proof, and so fast that they can produce DNA profiles in less than two hours—allow police to go on fishing expeditions for any hint of possible misconduct using DNA samples.

Journalist Heather Murphy explains: “As police agencies build out their local DNA databases, they are collecting DNA not only from people who have been charged with major crimes but also, increasingly, from people who are merely deemed suspicious, permanently linking their genetic identities to criminal databases.”

All 50 states now maintain their own DNA government databases, although the protocols for collection differ from state to state. Increasingly, many of the data from local databanks are being uploaded to CODIS, the FBI’s massive DNA database, which has become a de facto way to identify and track the American people from birth to death.

Even hospitals have gotten in on the game by taking and storing newborn babies’ DNA, often without their parents’ knowledge or consent. It’s part of the government’s mandatory genetic screening of newborns. In many states, the DNA is stored indefinitely. There’s already a move underway to carry out whole genome sequencing on newborns, ostensibly to help diagnose rare diseases earlier and improve health later in life, which constitutes an ethical minefield all by itself.

What this means for those being born today is inclusion in a government database that contains intimate information about who they are, their ancestry, and what awaits them in the future, including their inclinations to be followers, leaders or troublemakers.

Just recently, in fact, police in New Jersey accessed the DNA from a nine-year-old blood sample of a newborn baby in order to identify the child’s father as a suspect in a decades-old sexual assault.

The ramifications of this kind of DNA profiling are far-reaching.

At a minimum, these DNA databases do away with any semblance of privacy or anonymity.

The lucrative possibilities for hackers and commercial entities looking to profit off one’s biological record are endless. It’s estimated that the global human identification market is projected to reach $6.5 billion by 2032.

These genetic databases and genomic technology also make us that much more vulnerable to creeps and cyberstalkers, genetic profiling, and those who would weaponize the technology against us.

Unfortunately, the debate over genetic privacy—and when one’s DNA becomes a public commodity outside the protection of the Fourth Amendment’s prohibition on warrantless searches and seizures—continues to lag far behind the government and Corporate America’s encroachments on our rights.

Moreover, while much of the public debate, legislative efforts and legal challenges in recent years have focused on the protocols surrounding when police can legally collect a suspect’s DNA (with or without a search warrant and whether upon arrest or conviction), the question of how to handle “shed” or “touch” DNA has largely slipped through without much debate or opposition.

As scientist Leslie A. Pray notes:

(продолжува)We all shed DNA, leaving traces of our identity practically everywhere we go… In fact, the garbage you leave for curbside pickup is a potential gold mine of this sort of material. All of this shed or so-called abandoned DNA is free for the taking by local police investigators hoping to crack unsolvable cases… shed DNA is also free for inclusion in a secret universal DNA databank.